Check Database Integrity

The Check Database Integrity task performs internal consistency checks of the data and index pages within the database.

Shrink Database log files

The Shrink Database task reduces the disk space consumed by the database and log files by removing empty data and log pages.

Reorganize Indexes

The Reorganize Index task defragments and compacts clustered and non-clustered indexes on tables and views. This will improve index-scanning performance.

Rebuild indexes

The Rebuild task reorganizes data on the data and index pages by rebuilding indexes. This improves performance of index scans and seeks. This task also optimizes the distribution of data and free space on the index pages, allowing faster future growth.

Update staitstics

The Update Statistics task ensures the query optimizer has up-to-date information about the distribution of data values in the tables. This allows the optimizer to make better judgments about data access strategies.

Clean History

The History Cleanup task deletes historical data about Backup and Restore, SQL Server Agent, and Maintenance Plan operations. This wizard allows you to specify the type and age of the data to be deleted.

Backup database

The Back Up Database (Full) task allows you to specify the source databases, destination files or tapes, and overwrite options for a full backup.

Maintenance clean up

The Maintenance Cleanup task removes files left over from executing a maintenance plan.

Launch SQL Management Studio

Expand the "Management"

You could choose a single operation to run all the tasks or break them down into segments to be run separately.

Click on "Change" to choose an appropriate time to run the job.

This step is very easy. Choose your time and date you want the maintenance job to run.

At this point, you will choose all the task you want the maintenance plan to encapsulate.

Here you choose the databases. You can also select all databases.

You can also include indexes by checking the "include indexes" option.

Reorganizing indexes will be done on all the database selected. In this stage, you select the databases for this task. You can also choose all databases.

Here you specify the database where the indexes will be rebuilt.

Choose the frequency and files to clean.

Choose the mode of maintenance cleanup.

Getting feedback from the maintenance process is essential because it will indicate success or failure of the process. You can choose to write a report to a text file or email report directly to you.

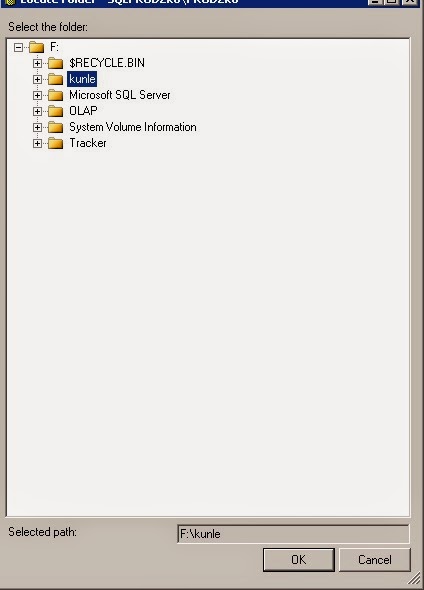

At this point, you can point to a location for storing the report.

The next screen will display a summary of all the tasks to be completed

Once the maintenance plan has been successfully created, a "Success" message appears in the next box.

The next step is to verify the Maintenance Plan.

1. Expand the "Management" menu

2. Expand the "Maintenance Plan".

You should see the maintenance plan in the dropdown list as shown in the image below.

Thanks for reading.